RagaAI LLM Hub Streamlines LLM Testing for Developers

RagaAI introduces the “LLM Hub,” an open-source platform with over 100 metrics to evaluate Large Language Models (LLMs), set guardrails for them, and prevent failures.

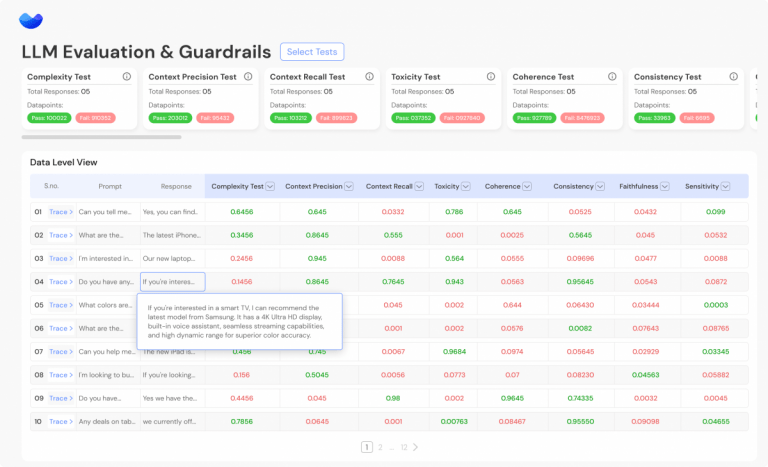

AI testing platform RagaAI has announced the “RagaAI LLM Hub,” a new open-source, enterprise-ready platform for evaluating LLMs and setting guardrails for them. With more than 100 carefully designed metrics, the platform aims to prevent catastrophic failures in LLM and retrieval-augmented generation (RAG) applications.

Organizations and developers can use the RagaAI LLM Hub’s comprehensive toolbox to evaluate and compare LLMs. It covers key areas such as vulnerability screening, context relevance, safety and bias, hallucination, content quality, and relevance and understanding. It also offers a set of metric-based tests for quantitative analysis.
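To make the idea of a metric-based test concrete, here is a minimal sketch in Python. It is not the RagaAI LLM Hub API: the function names, the token-overlap metric, and the 0.5 threshold are all assumptions chosen for illustration. It only shows the general pattern of computing a numeric score and comparing it with a pass/fail threshold.

```python
import re
from dataclasses import dataclass


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(question: str, context: str) -> float:
    """Fraction of question tokens that also appear in the context (0.0 to 1.0).

    A deliberately crude stand-in for a context relevance metric (assumption).
    """
    question_tokens = tokenize(question)
    if not question_tokens:
        return 0.0
    return len(question_tokens & tokenize(context)) / len(question_tokens)


@dataclass
class MetricResult:
    name: str
    score: float
    passed: bool


def run_metric_test(name: str, score: float, threshold: float) -> MetricResult:
    """Turn a numeric score into a pass/fail result against a threshold."""
    return MetricResult(name=name, score=score, passed=score >= threshold)


if __name__ == "__main__":
    question = "What is the refund policy?"
    context = "The refund policy allows returns within 30 days."
    score = overlap_score(question, context)
    # The threshold of 0.5 is a hypothetical value, not a recommended setting.
    print(run_metric_test("context_relevance", score, threshold=0.5))
```

A production metric would likely rely on embeddings or an LLM-based judge rather than token overlap, but the score-and-threshold structure stays the same.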

As data scientists and businesses settle on their ideal technology stack, holistic evaluation of LLMs is becoming increasingly important in LLM development. Gaurav Agarwal, the founder of RagaAI, told reporters that any given problem can have hundreds of potential root causes, and that diagnosing it accurately requires hundreds of metrics to narrow down the search.

“RagaAI LLM Hub’s comprehensive testing capabilities greatly enhance a developer’s workflow. They eliminate ad hoc analysis, which saves crucial time, and accelerate LLM development by a factor of three.”

The RagaAI LLM Hub aims to make LLM applications more dependable and trustworthy by identifying underlying problems and supporting their resolution at the source. It is designed to handle issues throughout the LLM lifecycle, from proof of concept to production applications.

RagaAI states that its LLM Hub enables this by providing a battery of tests that address different facets of an LLM application:

Prompts: It helps users iteratively identify optimal prompt templates and put guardrails in place to mitigate adversarial attacks.

Context Management for RAGs: It helps customers strike a balance between LLM performance, cost and latency, and scalability.

Response Generation: It uses metrics and guardrails to safeguard against bias, PII leakage, and hallucinations in LLM responses.

Using LLM Diagnosis to Reduce AI Bias and Hallucinations

The RagaAI LLM Hub supports a wide range of uses, including chatbots, content creation, text summarization, and source code generation. It serves developers and businesses in sectors as diverse as e-commerce, finance, marketing, law, and healthcare.

In sensitive industries like healthcare, law, and finance, the RagaAI LLM Hub goes beyond evaluation to help establish guardrails for data protection and legal compliance, while promoting responsible and ethical AI practices.

“One of our e-commerce clients was having trouble with a chatbot that was using LLMs to provide accurate responses to customer service inquiries. The problem was identified and fixed using the technology,” RagaAI’s Gaurav Agarwal told the media. “Protecting the privacy of health insurance policyholders is a top priority. A major data privacy issue occurred when one of our clients had some of their most sensitive personal information shared with a third party. This and related problems were identified and prevented in real time using the guardrails of the RagaAI LLM Hub.”

The platform also aims to reduce reputational risk by keeping LLM responses in line with established social norms and values.

RagaAI can detect personally identifiable information (PII) in LLM responses and define guardrails to prevent unauthorized access. According to Gaurav Agarwal, this is essential to Responsible AI and ensures that the LLM application does not leak personal data from internal documents. To protect themselves from social and reputational damage, businesses must also follow certain guidelines, such as providing honest and unbiased responses, refraining from discussing competitors, and removing any mention of material non-public information (MNPI).
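As a rough illustration of what a PII guardrail on LLM responses can look like, the Python sketch below scans a response for two kinds of PII and redacts them before the text is returned. It is not RagaAI's implementation: the pattern set, the function names, and the redaction format are assumptions, and real PII detection needs far broader coverage than two regular expressions.

```python
import re

# Illustrative patterns only (assumption): email addresses and US Social
# Security numbers. Real systems cover many more PII types and formats.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return the PII matches found in the text, grouped by pattern label."""
    findings = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            findings[label] = matches
    return findings


def redact_pii(text: str) -> str:
    """Replace every detected PII match with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED {label.upper()}]", text)
    return text


if __name__ == "__main__":
    response = "Contact jane.doe@example.com, SSN 123-45-6789."
    print(find_pii(response))
    print(redact_pii(response))
```

In practice, a guardrail like this would sit between the model and the user, so that a response is checked, and blocked or redacted, before it leaves the application.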

The RagaAI LLM Hub was launched in January 2024, following a successful $4.7 million seed investment round headed by pi Ventures. The round allowed the company to extend its AI research and development efforts, as well as its customer base in the US and Europe.

“We want to offer top-notch technologies that will make LLMs dependable and trustworthy. The company is investing heavily in developing key technology to address LLM quality assurance issues. In an effort to make this technology accessible to everyone, we are making it open-source so that the developer community may build on top of the best solution currently available,” explained Gaurav Agarwal.