Virginia Tech Uncovers Geographic Biases in ChatGPT’s Environmental Insights

Virginia Tech, a university in the United States, has released a report detailing possible biases in the artificial intelligence (AI) tool ChatGPT. The report also suggests that the tool's answers on environmental justice issues may vary in quality from one county to another.

In the report, the Virginia Tech researchers state that ChatGPT is limited in its ability to provide region-specific information on environmental justice issues. The study also found a clear pattern: such information was more readily available for larger and more densely populated states.

“In states with larger urban populations such as Delaware or California, fewer than 1 percent of the population lived in counties that cannot receive specific information.”

Less populated regions, by contrast, did not have the same access. According to the study, more than 90 percent of residents of rural states such as Idaho and New Hampshire lived in counties that could not obtain information specific to their area.

Furthermore, a lecturer named Kim from the Department of Geography at Virginia Tech emphasized the need for additional research due to the increasing frequency of identified prejudices. Kim made the following declaration.

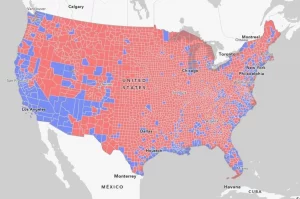

“Our findings reveal that geographic biases currently exist in the ChatGPT model,” despite the fact that additional research is required. In addition, the research paper included a map illustrating the extent to which the population in the United States lacks access to location-specific information on environmental justice issues.

Map of the United States showing areas where residents can (blue) or cannot (red) obtain location-specific information on environmental justice issues. Source: Virginia Tech

The findings add to recent reports in which academics have identified possible political biases in ChatGPT.